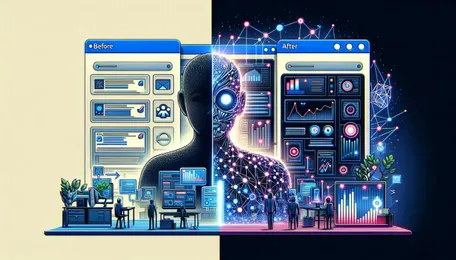

9elements x DISROOPTIVE x AI: Revolutionizing Workflow Efficiency

Innovating a company from within is a complicated task. You need to identify painpoints in your current processes, create benchmarks so that you can validate changes, and form hypotheses to get a feel for which things matter most and should be prioritized.

This is why we helped build DISROOPTIVE's web application. We digitalized the process in all of its facets, and the application helps you document and keep track of your goals.

DISROOPTIVE's web application focuses on finding innovative solutions for its customers in a multi-step process that involves gathering data from all over the world (wide web) to do exactly that: identify painpoints, benchmark companies and how they improved, create hypotheses for customer solutions, and much more. The application itself featured a sophisticated real-time multi-user environment, and every step along the way required user input.

The next Frontier

With the rise of ChatGPT (and LLMs in general), our client planned to raise the bar even further. The ability of LLMs to reason about tasks has improved dramatically, and we can only recommend that every company examine each of its digitalized processes to see whether it can be fundamentally improved.

For DISROOPTIVE we had the following goals:

Let the LLM identify the painpoints in your processes from nothing more than the project briefing / mission statement.

Make suggestions about possible benchmarks.

Derive customized hypotheses automatically.

Though an LLM won't replace your chief digitalization officer, you'll be surprised by the answers. With a little prompt engineering we got it to produce unconventional ideas, but more importantly, the LLM helped us not to forget the basics.

The client had dabbled with ChatGPT before, so he already knew that the model was in principle capable of providing the desired output for each of the steps mentioned above. The only question was what the solution would look and feel like.

The first Painpoints

I'm making this sound bad, but as stated above, the first goal was to generate painpoints given nothing but the initial project briefing. So we grabbed an OpenAI API key, placed a button in the frontend and hooked it up so that the backend would feed the prompt to OpenAI's GPT model. Since our data model already existed, we knew which properties the answer should contain, and since we wanted multiple painpoints in a single go, we asked for exactly that.

Ask and you shall be given

Following the initial problem description, the prompt contained "Answer in valid JSON format like this: [{ key: 'string', values: [string, string, string] }, { key: 'string', values: [string, ...] }, ...]". Checking the API responses showed that the answer was structured as requested, so we could easily build on top of it and create database records attached to the project. The first step was already complete. It was a rather raw implementation at first, but it worked like a charm. Well, most of the time...
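A minimal sketch of turning such a response into records, assuming a Python backend (the post does not name the stack) and the `key`/`values` shape from the prompt above. `Painpoint` and `parse_painpoints` are hypothetical names, and writing the records to the database is left out. Checking the shape before creating anything means a malformed answer raises an error instead of producing broken records.

```python
import json
from dataclasses import dataclass


@dataclass
class Painpoint:
    group: str  # thematic "key" of the group
    text: str   # one entry from that group's "values" array


def parse_painpoints(raw: str) -> list[Painpoint]:
    """Flatten a response like [{"key": ..., "values": [...]}, ...] into records.

    Raises ValueError (json.JSONDecodeError is a subclass) if the answer is not
    valid JSON or does not have the requested shape.
    """
    data = json.loads(raw)
    if not isinstance(data, list):
        raise ValueError(f"expected a JSON array, got {type(data).__name__}")
    records = []
    for group in data:
        if not (isinstance(group, dict) and "key" in group
                and isinstance(group.get("values"), list)):
            raise ValueError(f"unexpected group shape: {group!r}")
        for value in group["values"]:
            records.append(Painpoint(group=str(group["key"]), text=str(value)))
    return records
```

Each `Painpoint` keeps its thematic group, so the records can be attached to the project grouped the same way the model returned them.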

I said JSON, damnit!

It turns out that, rarely but from time to time, the answer wasn't JSON: sometimes just a string, sometimes just an array of strings. Explicitly reminding the model of the RFC 8259 JSON format partially fixed the issue, and ever since the release of GPT-4 Turbo and its introduction of response_format, AKA "JSON mode", the problem hasn't surfaced again. So everything looked promising: we had a working prompt for generating thematically grouped painpoints, which led to database records that enriched an in-app project based solely on the information in the project briefing. Great! We went on to build a small dashboard section where the admin could edit the prompts and the number of requested objects, leaving only the system prompts for the desired response structure hardcoded, since the system relied on them.
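As a sketch of that split, here is how a request body could combine the hardcoded system prompt with the admin-editable prompt and enable JSON mode, assuming a Python backend. Only the Chat Completions fields `model`, `messages` and `response_format` come from OpenAI's API; the prompt wording, the `{count}` placeholder, the `painpoints` wrapper key and the function name are hypothetical, and the default model id is simply GPT-4 Turbo's preview name at its release. The API rejects JSON mode unless the word "JSON" appears somewhere in the messages, which the hardcoded system prompt guarantees.

```python
# Hardcoded: the backend parses answers against this structure, so it is not editable.
SYSTEM_PROMPT = (
    "Respond only with valid JSON as defined in RFC 8259, shaped like "
    '{"painpoints": [{"key": "string", "values": ["string", "string"]}]}.'
)


def build_painpoint_request(briefing: str, admin_prompt: str, count: int,
                            model: str = "gpt-4-1106-preview") -> dict:
    """Assemble a Chat Completions request body with JSON mode enabled.

    `admin_prompt` is the dashboard-editable instruction; a literal "{count}"
    in it is replaced with the number of requested groups.
    """
    # str.replace instead of str.format, so other braces in admin text are harmless.
    user_prompt = admin_prompt.replace("{count}", str(count))
    return {
        "model": model,
        "response_format": {"type": "json_object"},  # JSON mode
        "messages": [
            # The word "JSON" must appear in the messages for JSON mode to be accepted.
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"{user_prompt}\n\nProject briefing:\n{briefing}"},
        ],
    }
```

With the official `openai` Python package (v1.x), the dict can be passed as `client.chat.completions.create(**request)`, and the answer text is then in `response.choices[0].message.content`.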

Uh, that leaves a benchmark

The next step was generating benchmarks. Benchmarking against other companies had originally been rather research-intensive: 'Which companies are out there, what do they do, where do they excel, and how could this be useful for the problem at hand?' This time, instead of just transforming a given text into short, precise painpoints, GPT was prompted to provide knowledge on exactly that question.

Ask for more and you shall be given more, way more

This time the prompt got more sophisticated, as did the underlying data model, but the basic principle of "Answer in valid JSON, put everything in an array" remained. The first response contained all the information necessary to create benchmarks in the database. Great again! But this time we noticed a significant increase in response time: from triggering the process in the frontend to seeing the benchmarks appear now took minutes. The response time for painpoints had been laughably low, but each item consisted of a few words or at most a couple of sentences. Benchmarks can span multiple paragraphs, which is on a completely different level in terms of output, and without streaming the model has to generate all of it before anything is returned. Once the response was processed and stored in the database, the result looked great, but from the user's perspective it felt odd to wait so long, whereas ChatGPT starts answering immediately.

Gently down the stream

Reminded of ChatGPT's streamed responses in the browser, we started looking into streaming the API response as well. Upon sending the request, we immediately received a response starting with "[", then "{", and it took only a couple of seconds until the first "}" had been streamed back to us. Of course the stream continued, delivering the next objects, each starting and ending with curly brackets. So we modified the code to look for a pair of opening and closing curly brackets, cut out everything between them (brackets included), parse it as JSON and create the record, all while the stream continued in the background and the next pair of curly brackets was identified and processed. The result? Instead of waiting a couple of minutes for the complete response with all benchmarks, users got their first benchmark within a couple of seconds, and while they were busy checking it out, the next one was nearly done processing, which made for a much smoother experience.
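A sketch of that extraction, assuming a Python backend and a model that streams a top-level JSON array of objects. Rather than pairing the first "{" with the next "}", it counts nesting depth and ignores brackets inside string values, since a benchmark's text or a nested object could otherwise cut an object short. `ObjectStreamExtractor` and `iter_objects` are hypothetical names.

```python
import json
from typing import Iterable, Iterator


class ObjectStreamExtractor:
    """Incrementally pulls complete top-level objects out of a streamed JSON array."""

    def __init__(self) -> None:
        self._buffer: list[str] = []  # characters of the object being read
        self._depth = 0               # current {...} nesting depth
        self._in_string = False       # inside a JSON string literal?
        self._escaped = False         # previous char was a backslash in a string?

    def feed(self, chunk: str) -> list[dict]:
        """Consume one streamed text delta; return every object it completed."""
        completed = []
        for ch in chunk:
            if self._depth == 0:
                # Between objects: skip "[", ",", whitespace and "]".
                if ch == "{":
                    self._depth = 1
                    self._buffer = [ch]
                continue
            self._buffer.append(ch)
            if self._in_string:
                if self._escaped:
                    self._escaped = False
                elif ch == "\\":
                    self._escaped = True
                elif ch == '"':
                    self._in_string = False
            elif ch == '"':
                self._in_string = True
            elif ch == "{":
                self._depth += 1
            elif ch == "}":
                self._depth -= 1
                if self._depth == 0:
                    # Closing bracket of a top-level object: parse it right away.
                    completed.append(json.loads("".join(self._buffer)))
                    self._buffer = []
        return completed


def iter_objects(deltas: Iterable[str]) -> Iterator[dict]:
    """Yield each object as soon as its closing bracket arrives."""
    extractor = ObjectStreamExtractor()
    for delta in deltas:
        yield from extractor.feed(delta)
```

With the `openai` Python package (v1.x), the deltas would be `chunk.choices[0].delta.content` for each chunk returned by `client.chat.completions.create(..., stream=True)`, skipping chunks where it is `None`. Each yielded object can be stored as soon as it arrives while the rest of the stream is still being generated.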

Visual communication

The final step involved combining the previous outputs into a new one, so we built another endpoint that took the recipe and ingredients, prompted GPT and processed the response on the fly, this time assisted by DALL-E to create a visual representation of the result. And while DALL-E 2 failed to amaze, DALL-E 3 is a huge leap forward in quality, rivaling Midjourney and the like while already providing an API.
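For the image step, a sketch of a DALL-E 3 request body, assuming a Python backend. The endpoint fields (`model`, `prompt`, `n`, `size`) and the limits noted in the comments come from OpenAI's Images API; `build_image_request`, its `title`/`summary` parameters and the prompt template are hypothetical, since the post does not say how the combined result was turned into an image prompt.

```python
ALLOWED_SIZES = {"1024x1024", "1792x1024", "1024x1792"}  # sizes accepted by dall-e-3
MAX_PROMPT_CHARS = 4000  # dall-e-3 prompt length limit


def build_image_request(title: str, summary: str, size: str = "1024x1024") -> dict:
    """Build a POST /v1/images/generations body from a combined result (hypothetical fields)."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"unsupported size for dall-e-3: {size}")
    # Hypothetical prompt template; the real wording would need experimentation.
    prompt = f"A clean, modern illustration of the concept '{title}': {summary}"
    return {
        "model": "dall-e-3",
        "prompt": prompt[:MAX_PROMPT_CHARS],  # truncate rather than let the API reject it
        "n": 1,  # dall-e-3 generates one image per request
        "size": size,
    }
```

With the `openai` Python package (v1.x), `client.images.generate(**request)` returns the image URL in `response.data[0].url` by default.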

The Impact

Seeing GPT-4, and now GPT-4 Turbo, at work is just incredible. DISROOPTIVE's use case seems exceptionally well suited to AI assistance: it removes the grunt work and lets users focus on making strategic decisions, revolutionizing their workflow.

Are you interested in what DISROOPTIVE is doing? Do you have a project that could benefit from AI integration? Or do you want to build something from the ground up? We'd love to hear from you! Get in touch with us!