Blending AI and Artistry: A Journey in Digital Creation for a Fictional Movie Poster

There are many AI tools for image generation, and it seems like a new one appears every day. The results are often very impressive, yet they can also feel quite random. I wanted to see what I could achieve by setting a clear goal and working toward it.

My idea: a movie poster for a fictional sequel to Disney's live-action adaptation of The Little Mermaid. The main character should be a blend of King Triton and the sea witch Ursula.

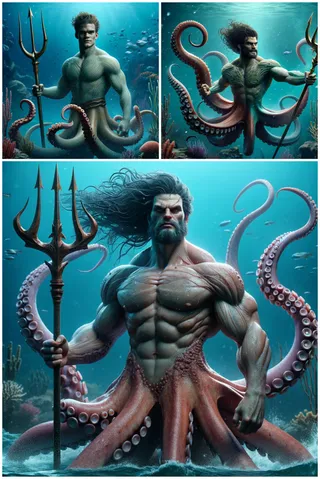

I started with Midjourney. The images are impressively "real," but I couldn't manage to create a hybrid character with a human upper body and an octopus lower body.

So, I turned to DALL-E by OpenAI. Here, it was much easier to create images that were closer to what I wanted. However, the results are much more artificial, resembling the graphics of video games.

After seeing these images, I thought we might not be there yet, or at least that my skills weren't sufficient to create a photorealistic image with the desired content. A few days later, my social media timeline was flooded with posts about Magnific AI, an upscaling tool that enlarges AI-generated images while adding details. So, I revisited my merman creations to see if I could enhance the images I already had. And I must say, the result was mind-blowing for me. Not only were the original images doubled in width from 1024px to 2048px, but amazing details were also added, making the image immediately appear much more realistic (as realistic as one can get with a merman with tentacles 😅).

My enthusiasm was reignited. Although I found the result very impressive, a closer look revealed some flaws:

He appears to be both under water and in the water.

The tentacles seem to emerge from nowhere and don't appear to be connected to a body.

An eye is growing on his left upper arm.

...

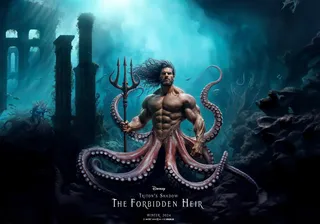

From there, I began working with Photoshop. I first cropped the lower part of the image and then had Photoshop expand the entire image. While Photoshop's inpainting options have not convinced me, the outpainting features sometimes yield very impressive results. This allowed me to extend the tentacles downward and give the entire scene more space on all sides.

After enlarging the image twice, I was working with a resolution of over 4000px in both width and height. Unfortunately, the background generated with Photoshop was always somewhat blurry. So, I used DALL-E and Magnific AI again to create a sunken underwater city. I was quite impressed with the result.

I then rather amateurishly incorporated part of this image into my existing one. With more time and talent, one could certainly have created a good montage using classic Photoshop skills. Instead, I took my basic montage, halved its size, and then enlarged it again with Magnific AI.
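To make the size juggling in this step more concrete, here is a minimal Python sketch of the pixel arithmetic only. It does not open any image or call Magnific AI. The 4000px starting size is a stand-in for the "over 4000px" mentioned above, and the 2x upscale factor is my assumption, not a setting I can confirm.

```python
def scale(size, factor):
    """Return (width, height) scaled by factor, rounded to whole pixels."""
    width, height = size
    return (round(width * factor), round(height * factor))


def megapixels(size):
    """Return the pixel count of a (width, height) pair in megapixels."""
    width, height = size
    return width * height / 1_000_000


# Hypothetical starting size: the text only says "over 4000px" per side.
montage = (4000, 4000)

# Halving each side leaves a quarter of the pixels.
halved = scale(montage, 0.5)

# Assumed 2x upscale, which restores the original dimensions.
upscaled = scale(halved, 2)

for label, size in [("montage", montage), ("halved", halved), ("upscaled", upscaled)]:
    print(f"{label}: {size[0]}x{size[1]} px, {megapixels(size):.1f} MP")
```

Under these assumptions, halving the montage leaves only a quarter of the pixels, so three quarters of the pixels in the final image are newly generated by the upscaler.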

From this point, it was a mix of classic Photoshop retouching and AI-supported modifications. I smoothed out irregularities, adjusted skin tones, and added more details to the background. The only thing still missing was the movie title, which I created in Figma and then integrated into the existing file.

In conclusion, I am very satisfied with the result. I couldn't have created such a poster without AI support. However, a lot of manual work went into it as well. The necessary effort will surely decrease significantly in the future, but for now it's important to understand the strengths and weaknesses of the tools we currently have at our disposal.